Time complexity of the SVM (Support Vector Machine) Algorithm

What is the Training-time complexity of SVM (Support Vector Machine)

The time complexity of the SVM training algorithm is primarily affected by the choice of the optimization algorithm used during training. The most commonly used optimization algorithms are the Sequential Minimal Optimization (SMO) and the gradient-based methods such as the LIBSVM.

In general, the time complexity of a kernel SVM can be described as follows:

Sequential Minimal Optimization (SMO): O(n^2 * k) to O(n^3 * k)

LIBSVM: O(n^2 * k) to O(n^3 * k)

Here, 'n' is the number of training instances, and 'k' is the number of iterations required for convergence.

The time complexity of a kernel SVM can also be affected by the specific kernel used. For instance, linear kernels have a lower time complexity compared to more complex kernels, such as Gaussian or polynomial kernels. The time complexity of SVMs using linear kernels can be reduced by using techniques like linear programming, which has a time complexity of O(n * d), where 'd' is the number of features.

To further clarify, let's consider two examples:

Linear Kernel SVM

Suppose you have a dataset with 1,000 training instances and 100 features. Using a linear kernel SVM, the time complexity for training the model would be O(n * d) = O(1,000 * 100) = O(100,000).

Gaussian Kernel SVM

For the same dataset, if you use a Gaussian kernel SVM with SMO optimization, the time complexity for training the model would be between O(n^2 * k) and O(n^3 * k). Assuming the number of iterations 'k' required for convergence is 10, the time complexity would range between O(1,000^2 * 10) = O(10,000,000) and O(1,000^3 * 10) = O(10,000,000,000).

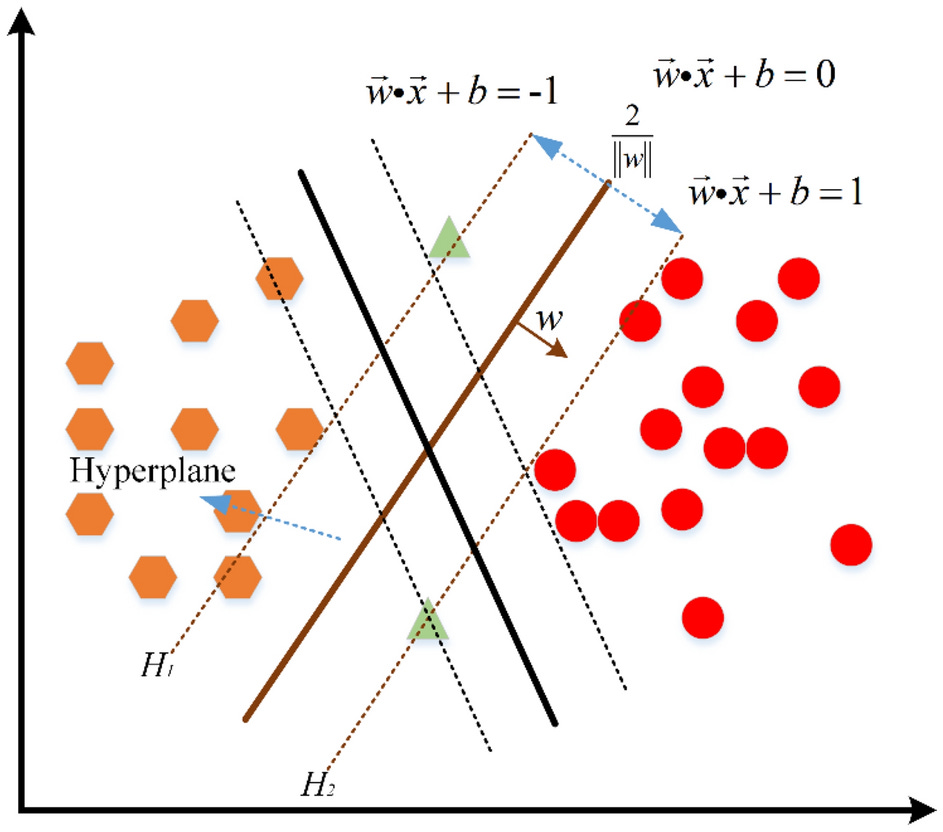

In practice, kernel SVMs are well-suited for solving small to moderately-sized problems, especially when the data is not linearly separable, and the feature space needs to be transformed using a kernel function. However, for large-scale problems with millions of data points, kernel SVMs may not be the most computationally efficient choice. In such cases, linear SVMs, decision trees, or deep learning techniques can be considered, depending on the specific problem and data characteristics.

What is the run-time complexity of SVM (Support Vector Machine)

In general, the runtime complexity of a kernel SVM for making predictions can be described as follows:

Runtime complexity: O(m * s * f)

Here, 'm' is the number of instances for which predictions are to be made, 's' is the number of support vectors, and 'f' is the complexity of computing the kernel function.

Let's break down each component:

Number of instances (m): The number of instances for which you need to make predictions. As the number of instances increases, the time taken to make predictions will increase linearly.

Number of support vectors (s): Support vectors are the critical data points that define the decision boundary. These data points have a direct impact on the SVM's runtime complexity. A larger number of support vectors will result in higher runtime complexity, as each prediction requires computing the kernel function between the test instance and each support vector.

Complexity of the kernel function (f): The kernel function's complexity will also affect the runtime complexity. For instance, linear kernels generally have a lower complexity compared to more complex kernels such as Gaussian or polynomial kernels.

To further illustrate the concept, let's consider an example:

Suppose you have a trained kernel SVM with 200 support vectors and are using a Gaussian kernel. You need to make predictions for 500 instances.

In this case, the runtime complexity would be O(m * s * f) = O(500 * 200 * f). The complexity 'f' depends on the specific kernel function. Assuming the Gaussian kernel computation takes 'c' operations, the overall runtime complexity would be O(500 * 200 * c).

In conclusion, the runtime complexity of kernel SVMs depends on the number of instances for which predictions are to be made, the number of support vectors, and the complexity of the kernel function. When using kernel SVMs for making predictions, it's crucial to consider the trade-offs between accuracy and computational efficiency, especially for large-scale applications or real-time prediction tasks.