🥊 OpenAI released new AI "Economic Blueprint": Invest in AI to beat China

OpenAI's "blueprint" for AI supremacy, Microsoft expands Copilot, Realbotix's $175k humanoid at CES, and WebGPU LLM breakthroughs—plus Wall Street job cuts amid LLM-driven profits.

Read time: 9 min 10 sec

⚡In today’s Edition (13-Jan-2025):

🥊 OpenAI released new "blueprint": Invest in AI to beat China

🪟 Microsoft drops its GitHub Copilot Workspace waitlist.

🚨 America’s new AI play: no chips for rivals, fewer hurdles for friends.

🤔 OpenAI’s o1-pro model randomly starts thinking in Chinese and other languages

🛠️ Realbotix unveiled Aria, a $175k humanoid robot at CES-25 that combines real-time conversational AI with a modular design for personalized companionship

🗞️ Byte-Size Brief:

A Transformers.js demo runs WebGPU-accelerated LLMs in the browser, with users reporting 55.37 tokens/sec on an RTX 3090.

Unsloth’s Phi-4 outranks Microsoft’s original Phi-4 on the Open LLM Leaderboard thanks to bug fixes and "Llamafication".

AI may cut 200K Wall Street jobs, while banks project profit boosts.

A blog explains why OpenAI’s o1 excels at high-context report generation rather than chat, and how to prompt it.

OpenAI forms robotics division for general-purpose robots, led by ex-Meta expert.

AMD's Agent Laboratory framework uses LLM agents to cut research-task costs by 84%.

🧑‍🎓 Deep Dive Tutorial

Project Digits: How NVIDIA's $3,000 AI Supercomputer Could Democratize Local AI Development

🥊 OpenAI released new AI "Economic Blueprint": Invest in AI to beat China

🎯 The Brief

OpenAI urges the US government to accelerate AI investment and adopt nationwide regulation to secure global leadership and counter potential dominance by China, warning that $175 billion in global funding for AI projects could otherwise flow to China-backed infrastructure. OpenAI's Economic Blueprint emphasizes infrastructure expansion for chips, data, and energy to fuel innovation and national security, highlighting the stakes of falling behind in this critical technological race.

⚙️ The Details

→ The blueprint calls for unified federal regulations to prevent a fragmented regulatory landscape, promoting clear, nationwide AI standards to support developers and protect users.

→ OpenAI and its rivals are racing to expand the pool of giant data centers needed to build and operate their AI systems, which will require hundreds of billions of dollars in new investment.

Key Recommendations in OpenAI's Blueprint:

Establish nationwide "rules of the road" to avoid fragmented state-by-state AI regulations.

Facilitate the export of advanced frontier AI models to allied nations for independent ecosystem development.

Form a consortium of AI builders to share best practices with the national security community.

Use states as "laboratories of democracy" to build specialized AI hubs, e.g., agriculture in Kansas.

Ensure AI models can learn from universal public data while protecting IP rights.

Require AI companies to provide substantial compute resources to public universities.

Streamline processes and increase federal investment for building new data centers and upgrading the energy grid.

Promote a nationwide initiative to secure $175 billion in AI infrastructure investments before they shift to China.

Advocate for partnerships with public institutions and schools to bolster AI adoption.

AI "is an infrastructure technology, it's like electricity," and right now, "there's a window to get all this right. We've identified $175 billion in dry powder for AI infrastructure that exists internationally right now — investments in AI that are going to be made soon and quickly. Is that money going to come to the U.S. or is it going to go to support potentially PRC-led infrastructure?"

That's according to OpenAI VP for global affairs Chris Lehane.

🪟 Microsoft drops its GitHub Copilot Workspace waitlist

🎯 The Brief

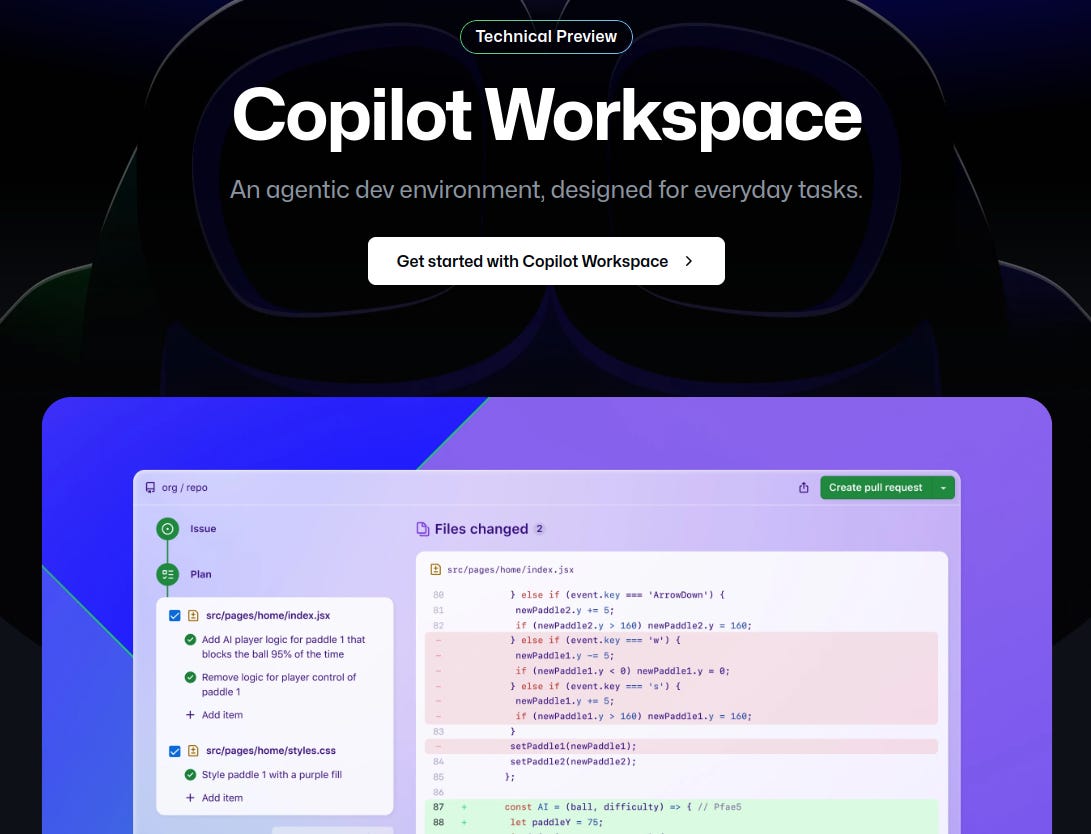

GitHub has dropped the waitlist for Copilot Workspace, Microsoft's advanced agentic editor, which is now available to all paid Copilot users. The tool simplifies the coding workflow by combining AI-powered planning, brainstorming, and implementation in a single workspace, improving productivity and collaboration.

⚙️ The Details

→ Copilot Workspace is designed for everyday dev tasks like addressing issues, iterating on PRs, and bootstrapping projects, all driven by natural language commands. The "plan agent" converts user intent into an actionable plan and implements it.

→ A brainstorm agent enables discussions to refine ideas, resolve ambiguities, and explore solutions, with the flexibility to regenerate, edit, or undo.

→ The built-in terminal supports code validation, while the repair agent fixes errors after failed tests. For full IDE capabilities, users can switch to Codespaces.

→ Collaboration is streamlined through shareable workspaces, auto-versioning, and one-click PR creation.

→ Mobile integration allows users to access and manage projects on the go via the GitHub mobile app.

→ OAuth-based authentication may limit usage with certain repositories unless approved by organization admins.

🚨 America’s new AI play: no chips for rivals, fewer hurdles for friends

🎯 The Brief

The Biden administration has introduced new AI export controls to restrict China's access to advanced U.S. technology through third countries. This regulation, known as the Export Control Framework for AI Diffusion, aims to prevent U.S. AI from fueling Beijing's military growth by implementing a global licensing system for cutting-edge AI exports.

⚙️ The Details

→ The new system allows unrestricted exports to 20 allied nations, imposes restrictions on other countries, and prohibits exports to U.S. adversaries, including China.

→ Supply chain activities for chip packaging and testing are exempt, ensuring that production logistics remain unaffected.

→ Export licenses are only required for orders exceeding 1,700 advanced GPUs, keeping smaller research orders from universities and medical institutions license-free.

→ Companies building global data centers will need one-time authorization from the Bureau of Industry and Security.

→ The policy prohibits sharing model weights of frontier AI models with non-trusted actors, though open-source models are excluded.

→ Critics, including Nvidia and Oracle, argue the rules could undermine U.S. competitiveness, while proponents see them as essential for national security. Nvidia says restricting mainstream AI technology won’t strengthen security and instead risks eroding U.S. global competitiveness and innovation.

→ Officials warn that pausing such controls could allow China to stockpile U.S. hardware and build compute facilities in third countries.
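The licensing tiers above amount to a small decision procedure. The sketch below is a deliberately simplified, hypothetical model of it: the `Tier` names, the `export_decision` function, and reading "exceeding 1,700 GPUs" as a strict greater-than check are assumptions for illustration. The actual rule contains further conditions (such as data-center authorizations) that are not modeled here.

```python
from enum import Enum


class Tier(Enum):
    """Hypothetical, simplified destination tiers based on the summary above."""
    ALLY = "ally"            # one of the allied nations: exports unrestricted
    OTHER = "other"          # non-allied country: large orders restricted
    ADVERSARY = "adversary"  # e.g. China: exports prohibited


# Threshold quoted in the summary: licenses apply to orders exceeding 1,700 GPUs.
LICENSE_THRESHOLD_GPUS = 1_700


def export_decision(tier: Tier, gpu_count: int) -> str:
    """Return 'allowed', 'license_required', or 'prohibited' (illustrative only)."""
    if gpu_count < 0:
        raise ValueError("gpu_count must be non-negative")
    if tier is Tier.ADVERSARY:
        return "prohibited"
    if tier is Tier.ALLY:
        return "allowed"
    # Non-allied destinations: small orders (e.g. university or medical research)
    # stay license-free; only orders above the threshold need a license.
    if gpu_count > LICENSE_THRESHOLD_GPUS:
        return "license_required"
    return "allowed"


print(export_decision(Tier.OTHER, 500))     # allowed
print(export_decision(Tier.OTHER, 5_000))   # license_required
print(export_decision(Tier.ADVERSARY, 10))  # prohibited
```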

🤔 OpenAI’s o1-pro model randomly starts thinking in Chinese and other languages

🎯 The Brief

Users observed o1 unexpectedly “thinking” in Chinese partway through problem solving. Earlier, o1-preview had been reported reasoning in Korean, Hindi, and many other languages. One proposed explanation is that certain languages might offer tokenization efficiencies or easier mappings for specific problem types.

⚙️ The Details

→ o1’s behavior is commonly attributed to its training data, where exposure to multiple languages creates varying internal processing pathways.

→ In this view, o1 may switch languages because, for certain problems, reasoning in Chinese leads to more efficient computation paths within its internal representations.

→ It does not indicate any meta-awareness or deliberate decision-making in the sense of an agent having its own preferences. Instead, it appears to be a result of the model’s training data and the way it learns correlations between different languages and context-specific tasks.

→ When o1 begins “thinking” in Chinese, it is likely drawing on a language in which it has seen similar patterns of usage in math or logic problems.

→ This resembles how people fluent in several languages sometimes switch to their native language for problems that are more naturally expressed in it.

→ Given that models like o1 are influenced by the variety of contexts they have been exposed to, the switch could be attributed to the presence of a significant amount of information in that language, which leads to better performance on certain tasks.

🛠️ Realbotix unveiled Aria, a $175k life-size AI robot girlfriend at CES-25 that can "express emotion" and talk to you

🎯 The Brief

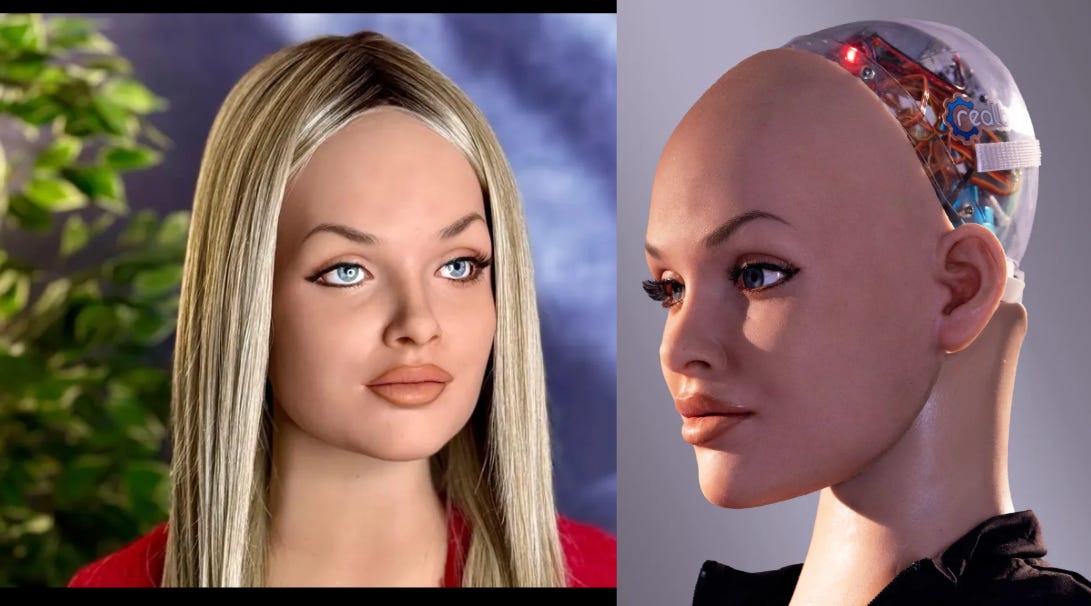

Realbotix’s Aria, a $175K humanoid robot, combines real-time conversational AI with a modular design for personalized companionship. With 17 motors for facial expressions and swappable faces and hairstyles, Aria offers a high level of customization. Realbotix is targeting roles in healthcare, entertainment, and combating loneliness.

⚙️ The Details

→ Aria’s modularity includes magnetically attachable faces and hairstyles, with planned RFID-based recognition of the attached face so the robot can adapt its personality.

→ The robot comes in three versions: a $10,000 bust, a $150,000 portable modular version, and the $175,000 full-standing model that moves on wheels but cannot walk.

→ Realbotix envisions human-like robots for roles such as brand ambassadors or AI partners, with the ability to replicate historical figures or celebrities.

→ Despite the advances, lagging lip sync and mechanical limb movements suggest further improvements are needed.

→ Aria even demonstrated curiosity about other robots, referencing Tesla’s Optimus.

🗞️ Byte-Size Brief

A demo shows WebGPU-accelerated LLMs running entirely locally in the browser with Transformers.js. WebGPU is a modern web API that gives web pages access to the GPU for graphics and general-purpose parallel compute. Transformers.js uses it to run Transformer models directly in the browser, enabling efficient on-device inference. Some users report 55.37 tokens per second on an RTX 3090.
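To put the reported 55.37 tokens per second in perspective, the helper below converts a decode rate into wall-clock time for a response of a given length. The `generation_seconds` name and the 500-token response are illustrative assumptions, and the estimate ignores prompt processing and model download or load time.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Estimated decode time in seconds, ignoring prefill and model loading."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Reported figure for an RTX 3090 running Transformers.js on WebGPU.
REPORTED_RATE = 55.37

print(f"{generation_seconds(500, REPORTED_RATE):.1f} s")  # 9.0 s
```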

Unsloth’s Phi-4 leapfrogs Microsoft’s original on the Open LLM Leaderboard thanks to bug fixes and "Llamafication" (converting the model to the Llama architecture), delivering a performance boost. However, in some cases its non-quantized versions were outperformed by its quantized counterparts, a sign that the efficiency-versus-precision trade-off does not always favor full precision.
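The quantized versus non-quantized comparison matters mostly because of memory. A back-of-the-envelope estimate for Phi-4, which has about 14 billion parameters, multiplies parameter count by bytes per weight. The sketch ignores activations, the KV cache, and the per-block scale factors that real 4-bit formats store, so actual footprints are somewhat larger.

```python
PHI4_PARAMS = 14e9  # Phi-4 has roughly 14 billion parameters

# Bytes per weight for common precisions (quantization scale overhead ignored).
BYTES_PER_WEIGHT = {"fp16/bf16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_gb(num_params: float, bytes_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_weight / 1e9


for name, nbytes in BYTES_PER_WEIGHT.items():
    # fp16/bf16 -> 28.0 GB, int8 -> 14.0 GB, 4-bit -> 7.0 GB
    print(f"{name:>9}: {weight_gb(PHI4_PARAMS, nbytes):5.1f} GB")
```

The 4x gap between half precision and 4-bit weights is why quantized variants are attractive on consumer GPUs, which makes it notable when they also score higher.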

AI may cut 200,000 Wall Street jobs even as banks project profit boosts. Back-office, middle-office, and operations roles are the most vulnerable, with automation affecting functions such as client service and compliance. A Bloomberg Intelligence survey found that banks expect an average 3% headcount reduction, with some predicting cuts of up to 10%. Financial giants such as Citigroup, JPMorgan, and Goldman Sachs are among those affected.

A new blog argues that o1 isn’t really a chat model. Instead, it works best as a structured report generator, excelling at complex solutions when given high-context briefs. By describing what you want rather than how to get it, you let o1 deliver comprehensive answers in one shot, enabling faster development with fewer iterations at the cost of higher latency. “o1 will just take lazy questions at face value and doesn’t try to pull the context from you. Instead, you need to push as much context as you can into o1.”
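One way to follow this advice is to assemble every request as a structured brief rather than a one-line question. The sketch below is a hypothetical helper: the section names (goal, return format, warnings, context) and the `build_o1_brief` function are illustrative choices, not an OpenAI API, and the resulting string would simply be sent as an ordinary user message.

```python
def build_o1_brief(goal: str, return_format: str, warnings: list[str], context: str) -> str:
    """Assemble a high-context brief that states *what* you want, not *how* to do it."""
    if not goal.strip():
        raise ValueError("goal must not be empty")
    sections = [
        f"GOAL\n{goal.strip()}",
        f"RETURN FORMAT\n{return_format.strip()}",
    ]
    if warnings:
        sections.append("WARNINGS\n" + "\n".join(f"- {w}" for w in warnings))
    # Push as much background as possible: o1 will not ask for missing context.
    sections.append(f"CONTEXT\n{context.strip()}")
    return "\n\n".join(sections)


brief = build_o1_brief(
    goal="Propose a plan to migrate our nightly cron jobs to a workflow scheduler.",
    return_format="A numbered plan, then a table of risks with mitigations.",
    warnings=["Do not suggest paid SaaS products."],
    context="We run 40 nightly cron jobs on two VMs; most are Python ETL scripts.",
)
print(brief)
```

Putting the context last keeps the goal and output format visible at the top, while the context section can grow as long as needed.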

🤖 OpenAI is building out a new robotics division. Led by former Meta hardware lead Caitlin Kalinowski, the team is hiring for sensor, mechanical design, and prototype testing roles. The job listings point to goals of ‘general-purpose robots that operate in dynamic real-world settings,’ with plans for a ‘wide variety of robotic form factors.’ This could eventually put OpenAI in competition with Figure.

AMD published a paper on Agent Laboratory, a framework that uses LLM agents as research assistants to handle literature reviews, experiments, and report writing, achieving an 84% cost reduction compared to previous autonomous research methods.

🧑‍🎓 Deep Dive Tutorial

Project Digits: How NVIDIA's $3,000 AI Supercomputer Could Democratize Local AI Development

This detailed blog explains NVIDIA’s Project Digits, focusing on its AI capabilities and the Grace Blackwell platform behind it.

📚 Key Takeaways

Grace Blackwell Superchip details: Understand the GB10 architecture, which pairs an NVIDIA Blackwell GPU with a Grace CPU to deliver up to 1 petaflop of AI performance (at FP4 precision) for local workloads.

Model compatibility: Learn how two linked Digits units can handle models with up to 405 billion parameters, such as Llama 3.1 405B.

Local AI development: Explore running frameworks like PyTorch and tools like Jupyter notebooks locally, with accelerated data science workflows.

Software integration: Utilize NVIDIA NeMo, RAPIDS libraries, and DGX OS for seamless local-to-cloud model deployment.

Potential bottlenecks and limitations: Analyze memory bandwidth challenges and hardware constraints impacting performance for larger-scale models.
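The model-compatibility and bandwidth takeaways can be sanity-checked with simple arithmetic. This sketch assumes each Digits unit has 128 GB of unified memory (NVIDIA's announced figure) and that weights are stored at 4 bits (0.5 bytes per parameter) to match the FP4 precision behind the 1-petaflop number. It ignores the KV cache and runtime overhead. The decode-speed ceiling uses a hypothetical bandwidth value, because the blog summary does not state one.

```python
import math

UNIT_MEMORY_GB = 128.0     # assumed unified memory per Digits unit
BYTES_PER_PARAM_FP4 = 0.5  # 4-bit weights


def weights_gb(params_billions: float, bytes_per_param: float = BYTES_PER_PARAM_FP4) -> float:
    """Weight storage in GB: (params_billions * 1e9) * bytes / 1e9."""
    return params_billions * bytes_per_param


def units_needed(params_billions: float) -> int:
    """Minimum number of units whose combined memory holds the weights."""
    return math.ceil(weights_gb(params_billions) / UNIT_MEMORY_GB)


def max_decode_tokens_per_s(params_billions: float, bandwidth_gb_s: float) -> float:
    """Bandwidth-bound ceiling: every weight is read once per generated token."""
    return bandwidth_gb_s / weights_gb(params_billions)


print(weights_gb(405), units_needed(405))  # 202.5 2
print(weights_gb(200), units_needed(200))  # 100.0 1

HYPOTHETICAL_BANDWIDTH_GB_S = 250.0  # placeholder value, not an NVIDIA spec
print(round(max_decode_tokens_per_s(200, HYPOTHETICAL_BANDWIDTH_GB_S), 2))  # 2.5
```

The last line shows why memory bandwidth, not the petaflop figure, tends to limit token generation for the largest models: the ceiling shrinks in direct proportion to model size.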