Total Read time: 5 minutes 20 seconds


(I write daily for my 112K+ AI-pro audience, with 4.5M+ weekly views. Noise-free, actionable, applied-AI developments only.)

🥉 Elon Musk unveils Grok 3 and 'Deep Search' tool

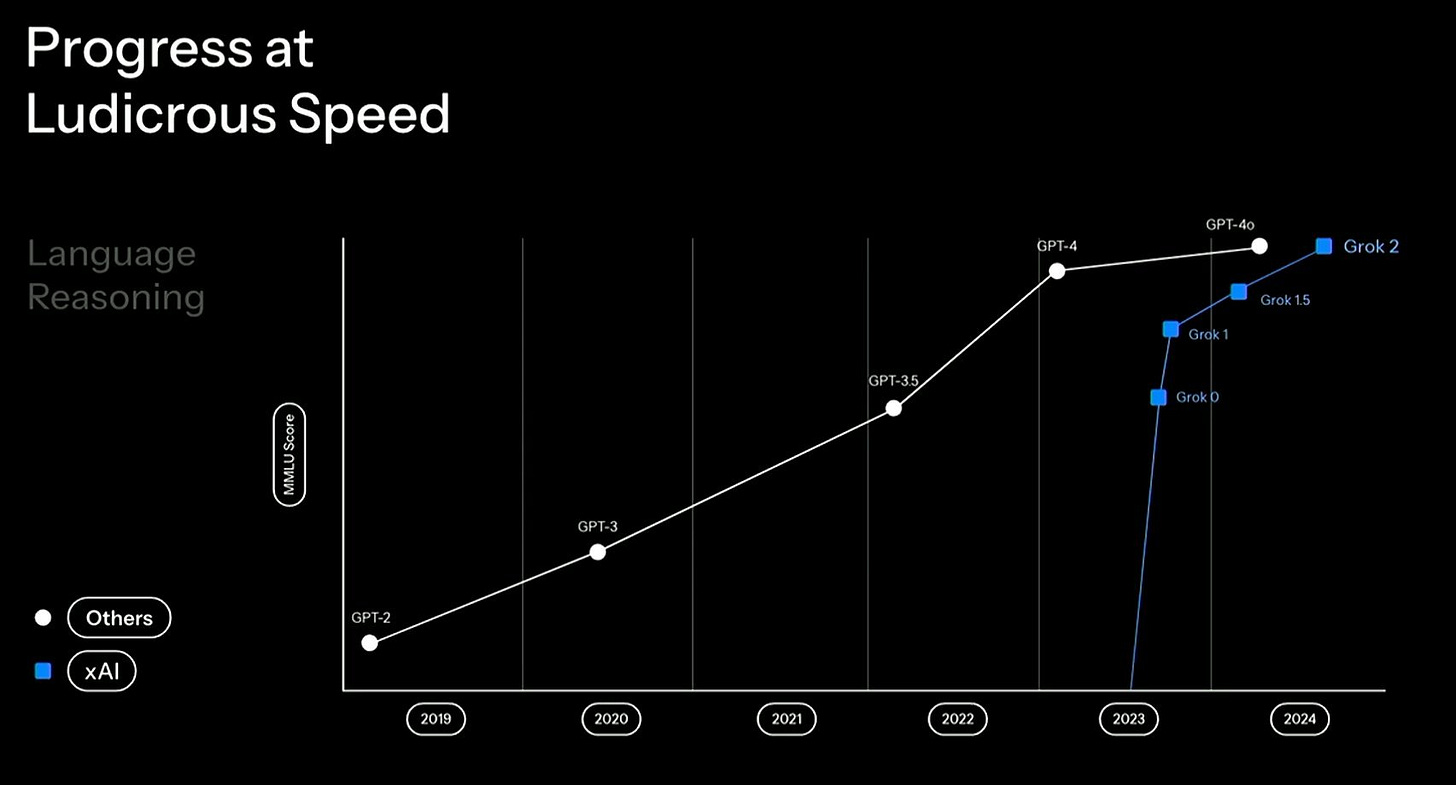

xAI has been using an enormous data center in Memphis containing around 200,000 GPUs to train Grok 3. According to xAI, this gave Grok 3 roughly “10x” the training compute of its predecessor, Grok 2.

Key Takeaways:

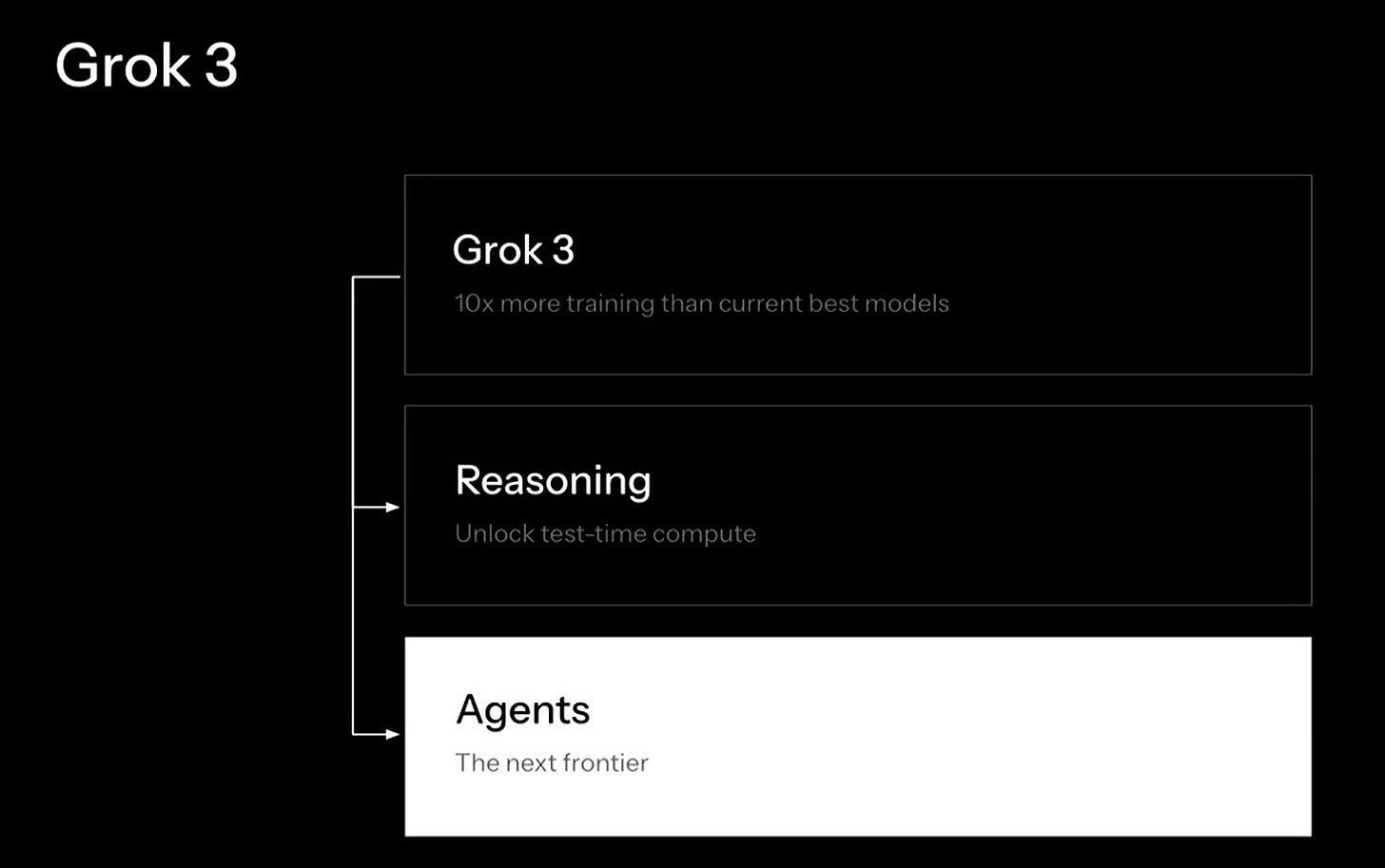

📌 Grok 3 base model, reasoning models and Deep Search are being rolled out to the Grok website and app from today.

📌 Apart from the base model, xAI also launched two reasoning models: Grok 3 Reasoning (beta) and Grok 3 mini Reasoning.

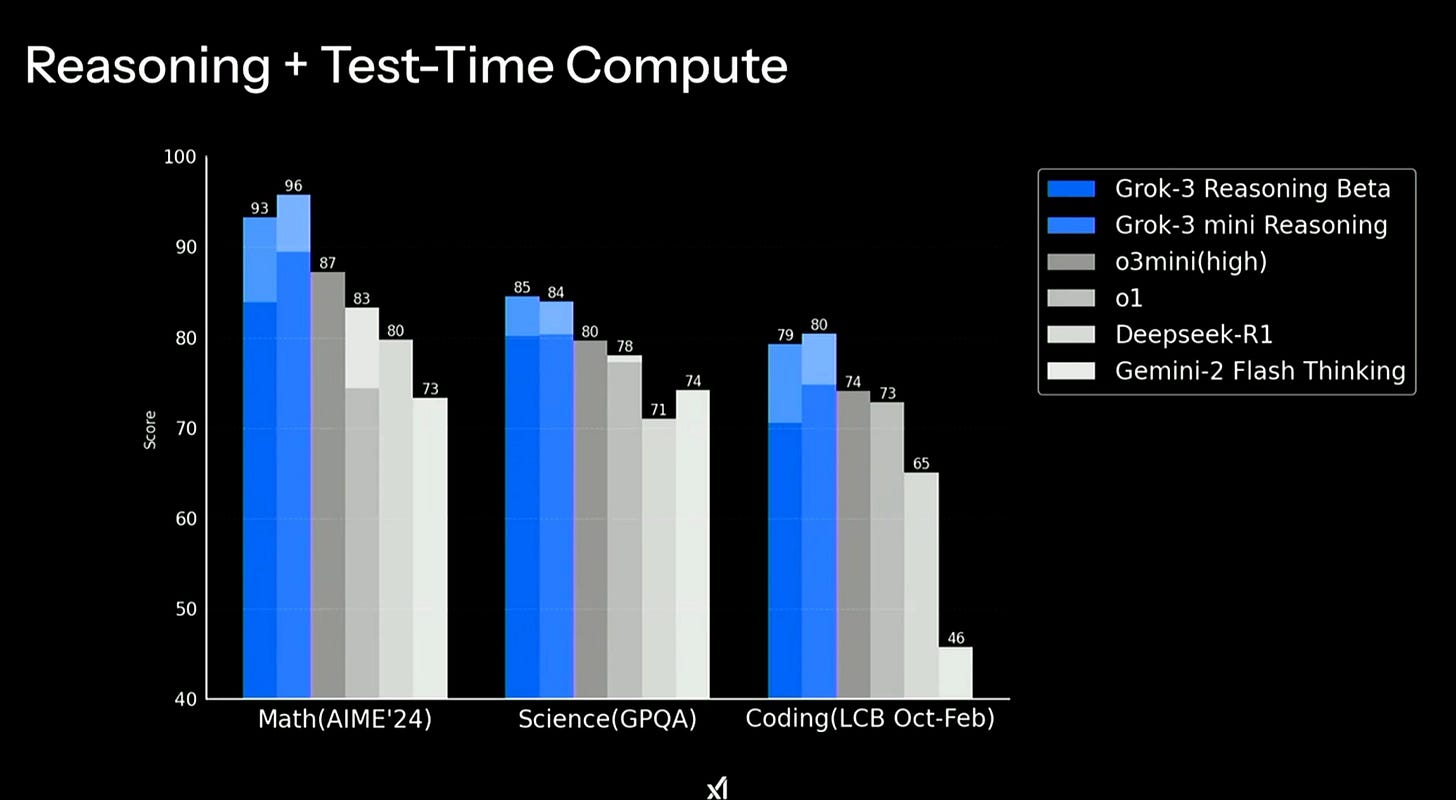

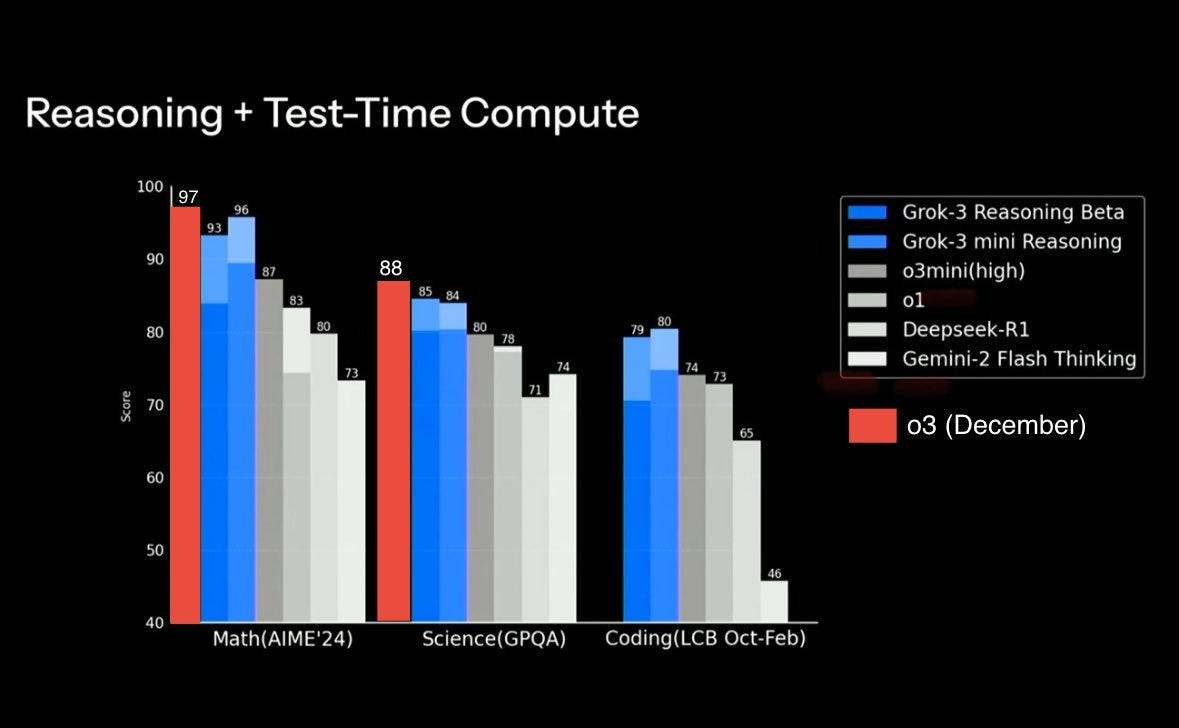

📌 xAI claims that its new reasoning models outperform OpenAI's o1 and o3-mini (high), Gemini Flash Thinking, and DeepSeek R1 on math, science, and coding benchmarks.

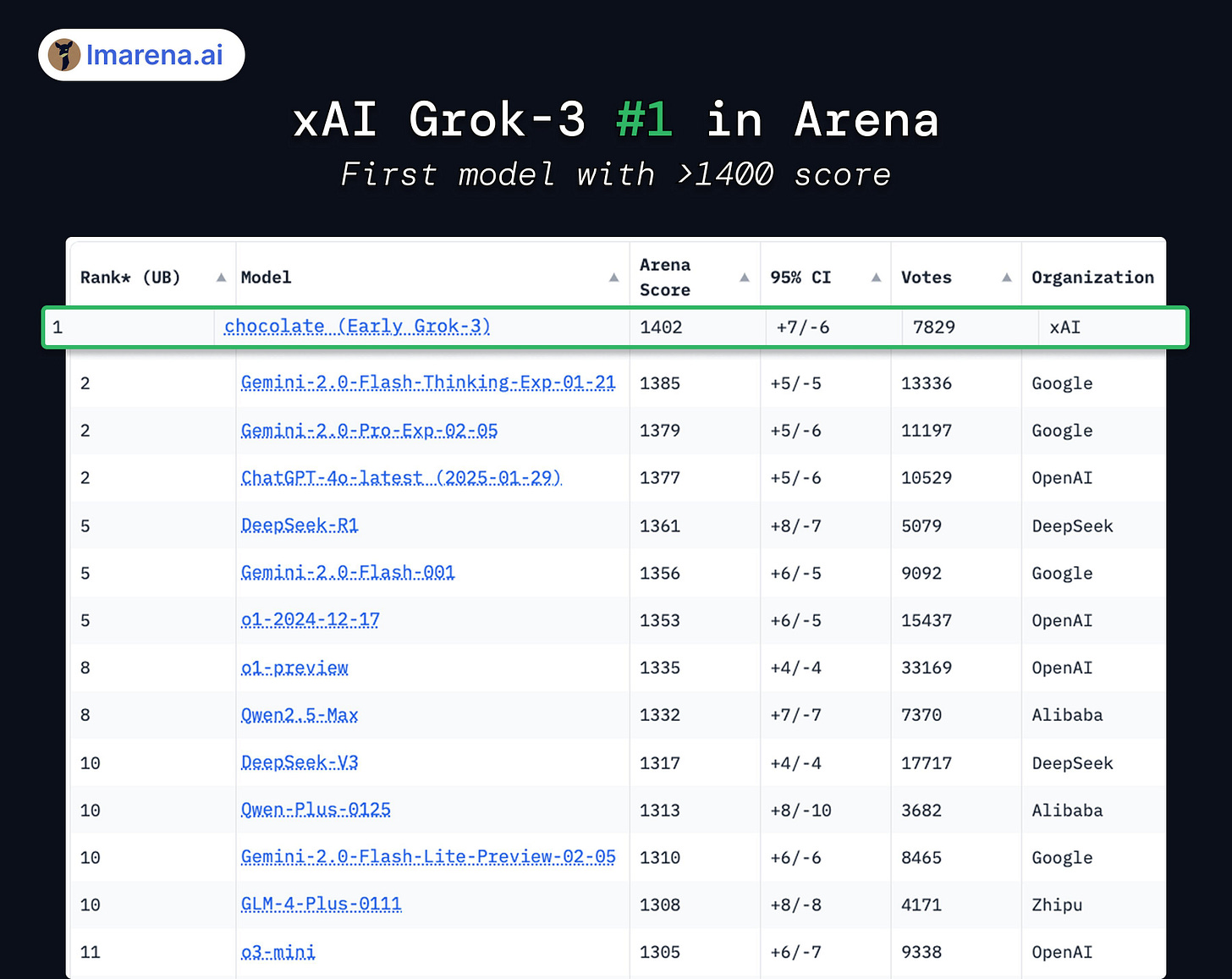

📌 1400 Elo score on LMArena, currently ranked #1

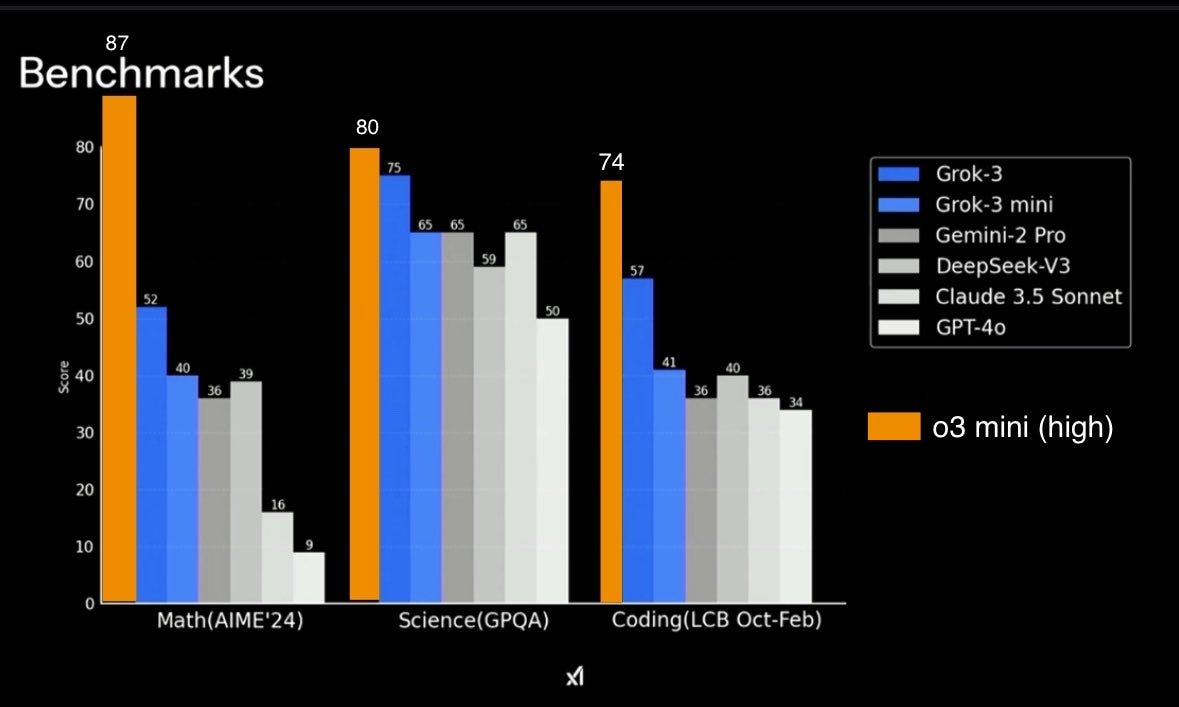

📌 AIME 24 — 52% [96% with reasoning!]

📌 GPQA — 75% [85% with reasoning]

📌 Coding (LiveCodeBench) — 57% [80% with reasoning]

Availability of Grok-3

Subscribers to X’s Premium+ tier ($50 per month) will get access to Grok 3 first. Other features will be gated behind a new plan that xAI is calling SuperGrok.

Priced at $30 per month or $300 per year (if leaks are to be believed), SuperGrok unlocks additional reasoning and DeepSearch queries, and throws in unlimited image generation.

Grok 3 also comes with a companion system called Deep Search. It is a research agent that draws on an internet-scale index and aims to return factual answers with supporting references. First impressions suggest that Deep Search can handle many of the same tasks as comparable research agents from OpenAI and Perplexity. However, users have occasionally caught it citing non-existent sources or surfacing poorly supported claims. Its retrieval capabilities are strong, but they are not free of hallucinated results, which remain an ongoing problem for large language models in general.

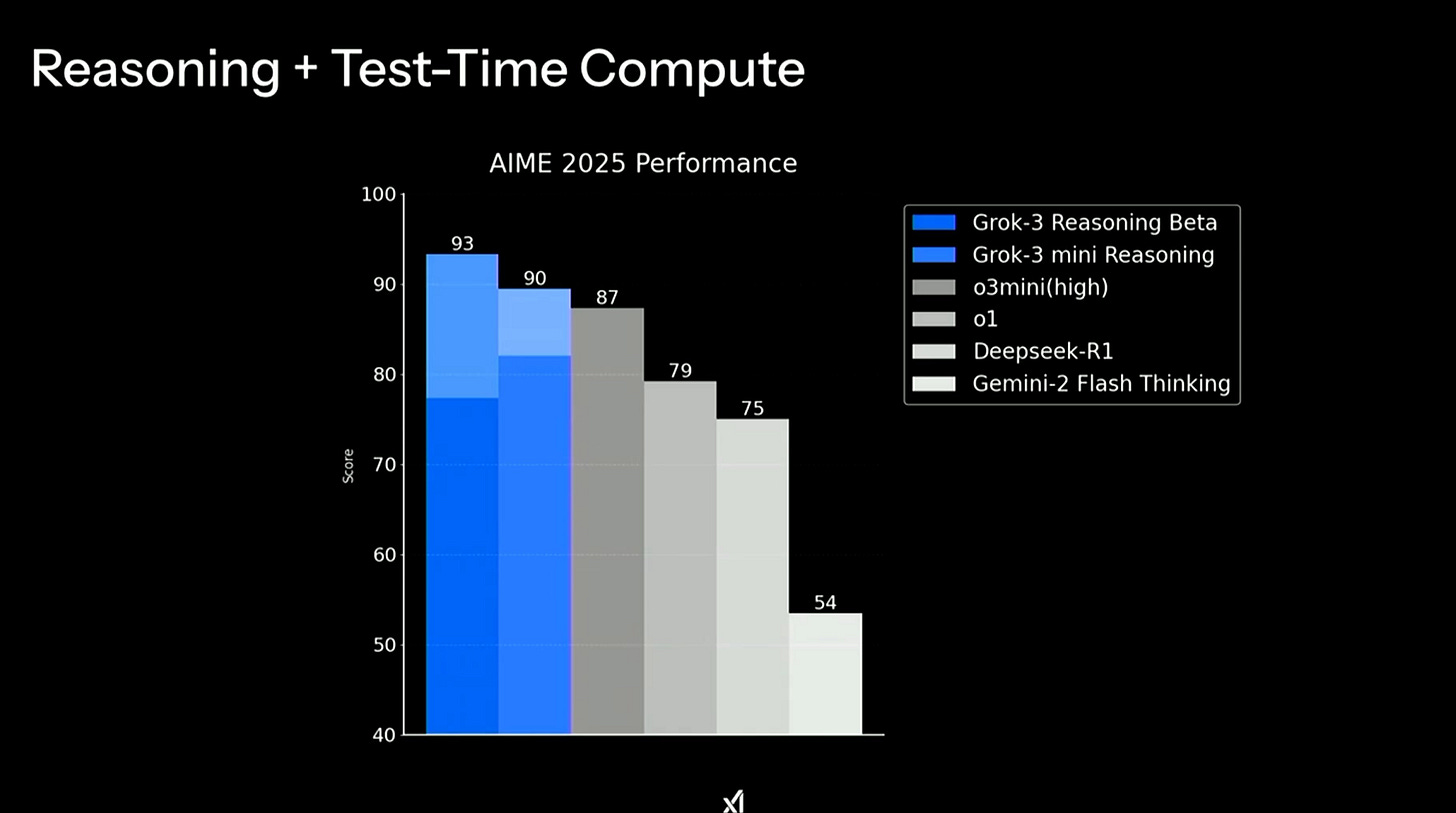

Grok 3 outperforms o3-mini (high), o1, and DeepSeek R1 on the AIME 2025 benchmark.

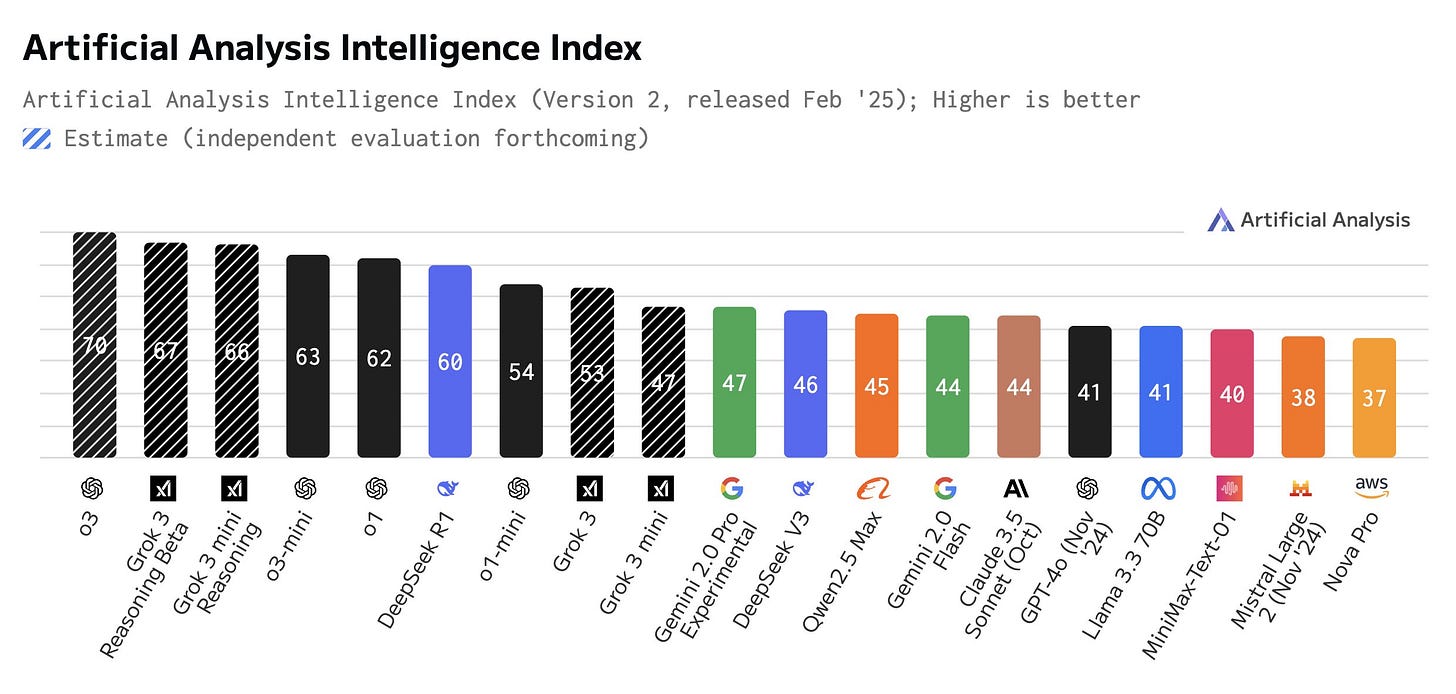

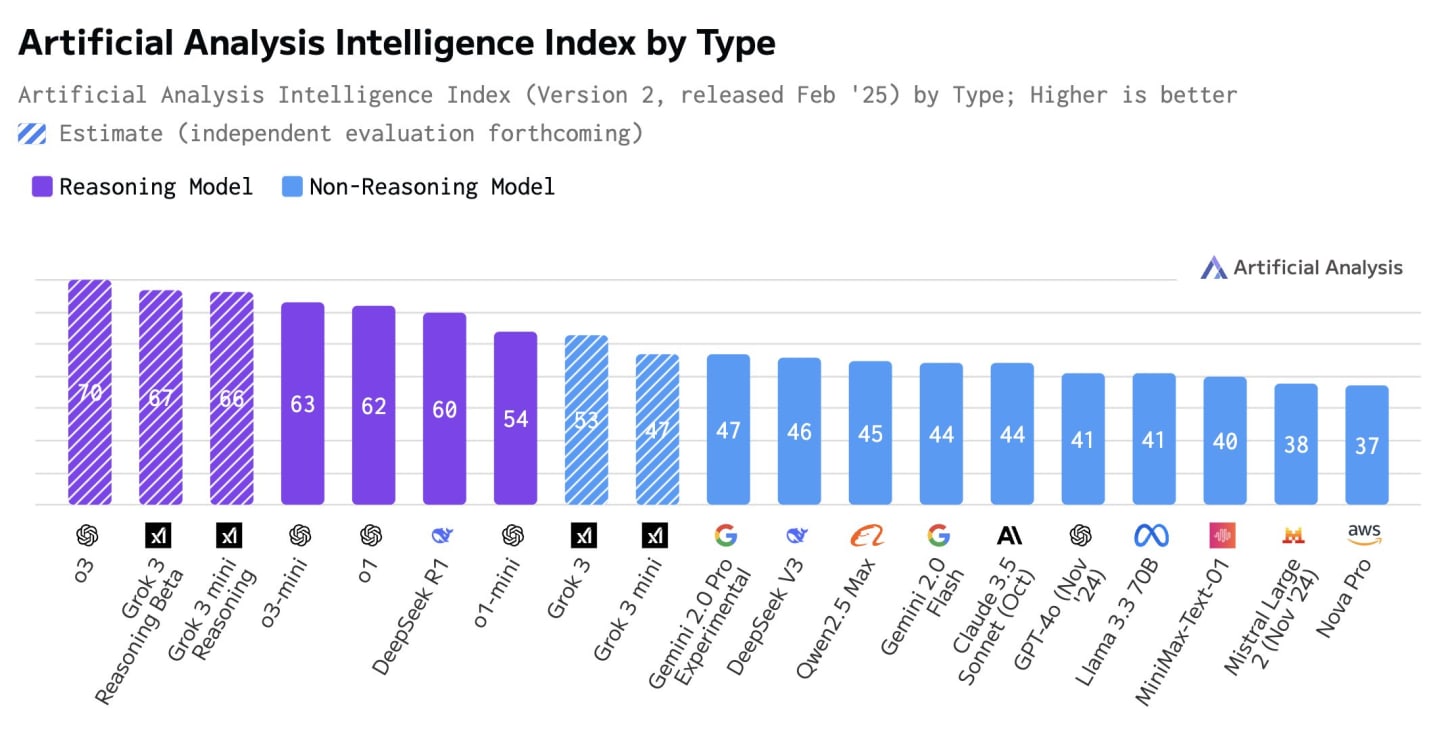

Artificial Analysis published its own benchmark numbers for Grok 3.

According to them, “Grok 3 Reasoning likely beats o3-mini and DeepSeek R1 to claim the mantle of the world’s leading reasoning model, pending the release of OpenAI’s full o3 model (still several weeks away).”

Reasoning models clearly dominate the intelligence frontier, but Grok 3 is now the leading non-reasoning model. Non-reasoning models are likely to remain in demand for latency- and cost-sensitive workloads.

Grok 3 is significantly more capable than Grok 2. Musk describes the jump as an order-of-magnitude improvement.

Early evaluations of Grok 3 in Chatbot Arena (LMSYS) show it reaching an Elo score of 1400. No other model has reached this milestone.

(Before launch, Grok 3 was tested in the Arena under the alias "chocolate". It is listed as Early Grok 3.)
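A 1400 rating is easier to interpret once you see what a rating gap means in head-to-head votes. Chatbot Arena reports scores on an Elo-style scale. The sketch below assumes the classic Elo expected-score formula (base 10, scale 400). It compares Grok 3 against a hypothetical model rated 1350, which is an illustrative number and not a real leaderboard entry.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo expected score of A against B.

    This is A's expected share of wins, with ties counted as half a win.
    It uses the standard base-10 logistic curve with a 400-point scale.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Hypothetical comparison: Grok 3 at 1400 vs. an assumed 1350-rated model.
p = elo_expected_score(1400, 1350)
print(f"Expected win rate: {p:.1%}")  # prints "Expected win rate: 57.1%"
```

Under this formula, a 50-point lead means winning only about 57% of pairwise votes. A record-setting score therefore signals a consistent edge over the rest of the field, not dominance.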

About Thinking Tokens of Grok-3

One of the more intriguing aspects of Grok 3 is its ability to generate step-by-step thinking traces. Elon Musk indicated that the underlying reasoning tokens are hidden to prevent easy imitation. Even so, the interactive “Thinking” and “Big Brain” modes show that Grok 3 can work through challenging tasks methodically before settling on an answer. According to early testers, this approach lets it tackle puzzles, advanced computations, and board-game questions with a thoroughness that sometimes surpasses other top-tier models.

Grok 3 is also proficient at creative coding tasks.

Musk stressed its emergent creative potential. Big Brain mode allocates more compute for deeper reasoning.

Beyond scaling test-time compute, Grok 3 is meant to power more sophisticated agents, and DeepSearch is the first product built on that capability.

The Memphis data center that xAI used to train Grok 3, called Colossus, houses about 200,000 GPUs.

The system became operational in September 2024 with 100,000 Nvidia GPUs. Dell supplied tens of thousands of the GPU-equipped servers powering Colossus.

Following a $6 billion funding round in December, xAI announced that it plans to double the number of GPUs in Colossus to 200,000 chips. The company’s ultimate goal is to grow that number to one million.

However, OpenAI's full o3 model still appears to be ahead.

The chart below shows o3's numbers alongside Grok 3's.

This chart was posted on X by a member of OpenAI’s product team.

Note, however, that neither Grok 3 nor the full version of OpenAI’s o3 with reasoning is publicly available yet. The chart below therefore compares only models that are currently publicly available.

This chart also comes from the same member of OpenAI’s product team.

Andrej Karpathy’s evaluation of Grok 3

Perhaps the most striking demonstration of Grok 3’s potential came when Karpathy uploaded the GPT-2 paper and asked a series of lookup questions. The model answered all of them accurately, showing that it can retrieve and process information from a document. The real challenge was to estimate the number of FLOPs required to train GPT-2. The paper does not spell out the token count, so the task demands lookup, estimation, and arithmetic together. The model has to deduce the token count from the size of the training text, estimate the number of epochs, and then compute total FLOPs from the parameter count. With its “Thinking” mode enabled, Grok 3 completed this multi-step calculation correctly. OpenAI’s o1-pro reasoning model failed the same test.
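The estimate Karpathy asked for can be sketched with the common rule of thumb for dense transformers: training compute is roughly 6 FLOPs per parameter per token, about 2 for the forward pass and 4 for the backward pass. Two inputs come from the GPT-2 paper: about 1.5B parameters for the largest model and about 40 GB of WebText. The bytes-per-token ratio and the epoch count are assumptions chosen for illustration. The sketch shows how the chain of lookup, estimation, and arithmetic fits together. It does not reproduce Grok 3's or Karpathy's exact answer.

```python
def estimate_training_flops(n_params: float, n_tokens: float, epochs: float = 1.0) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per parameter per token seen.

    About 2 FLOPs/param/token go to the forward pass and about 4 to the
    backward pass. The result is an order-of-magnitude estimate only.
    """
    return 6.0 * n_params * n_tokens * epochs


# Looked up from the GPT-2 paper (approximate).
N_PARAMS = 1.5e9       # largest GPT-2 model, ~1.5B parameters
TEXT_BYTES = 40e9      # WebText, ~40 GB of text

# Assumptions (not stated in the paper).
BYTES_PER_TOKEN = 4.0  # assumed ~4 bytes of English text per BPE token
EPOCHS = 10            # assumed number of passes over the data

tokens = TEXT_BYTES / BYTES_PER_TOKEN  # ~1e10 tokens
flops = estimate_training_flops(N_PARAMS, tokens, EPOCHS)
print(f"{tokens:.1e} tokens, ~{flops:.1e} training FLOPs")
```

With these assumptions, the estimate lands near 1e21 FLOPs. Changing the epoch count or the bytes-per-token ratio scales the result linearly, which is why the estimation steps matter as much as the arithmetic.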

Karpathy also noted that when asked about the Riemann hypothesis, Grok 3 tried to engage with the problem instead of dismissing it outright. Its exploration of this famous unsolved problem reached no conclusion and was eventually halted. Still, it was encouraging to see it take on such a complex challenge rather than respond with the quick refusal common to many other systems.

In summary, Karpathy’s evaluation suggests that Grok 3 with its “Thinking” mode enabled performs at a level comparable to the best reasoning models available today. Its results on tasks that require detailed procedural reasoning, such as generating a game interface or making multi-step arithmetic estimates, signal a promising step forward. Some areas, such as complex decoding tasks and generating nuanced puzzles, remain challenging.

That’s a wrap for today. See you all tomorrow.